In November I led the launch of beehiiv’s first AI agent, an assistant that could help you build your website using beehiiv’s web builder.

As a non-technical PM, I found leading this project a pretty steep learning curve, and there were a ton of concepts and definitions I wish I’d known beforehand.

So… if you’re considering building your own agent, here are all the things I wish I’d understood before I started.

Here’s what we’ll cover:

What is an agent?

Understanding the role of the Orchestrator

The role of Context

3 ways to optimize your agent and token usage

What are Evals and how to do them

What makes building AI features different from normal features?

If you’re used to building products, you will be familiar with ‘deterministic’ systems (you build it, you test it, and given the same input it generates the exact same output every single time).

The main concept you need to become comfortable with when working with AI is that the system you’re building is ‘probabilistic’ (depending on the context and prompt, it generates a likely output each time, and that output can vary significantly).

As a result, you lose a lot of the control that you’re typically used to and the experience for your users can be much more varied (and much harder to troubleshoot).

What is an agent?

The first thing to understand is the difference between a traditional ‘chatbot’ (eg the first version of ChatGPT) and an agent.

Traditional chatbot (Original ChatGPT)

Input (Your Prompt) → LLM (Processing) → Output (Response)

The key point is that the model gets one pass at the answer. If it starts a sentence wrong, it will keep going and try to make it sound correct. This is one reason hallucinations are so common.

Agent

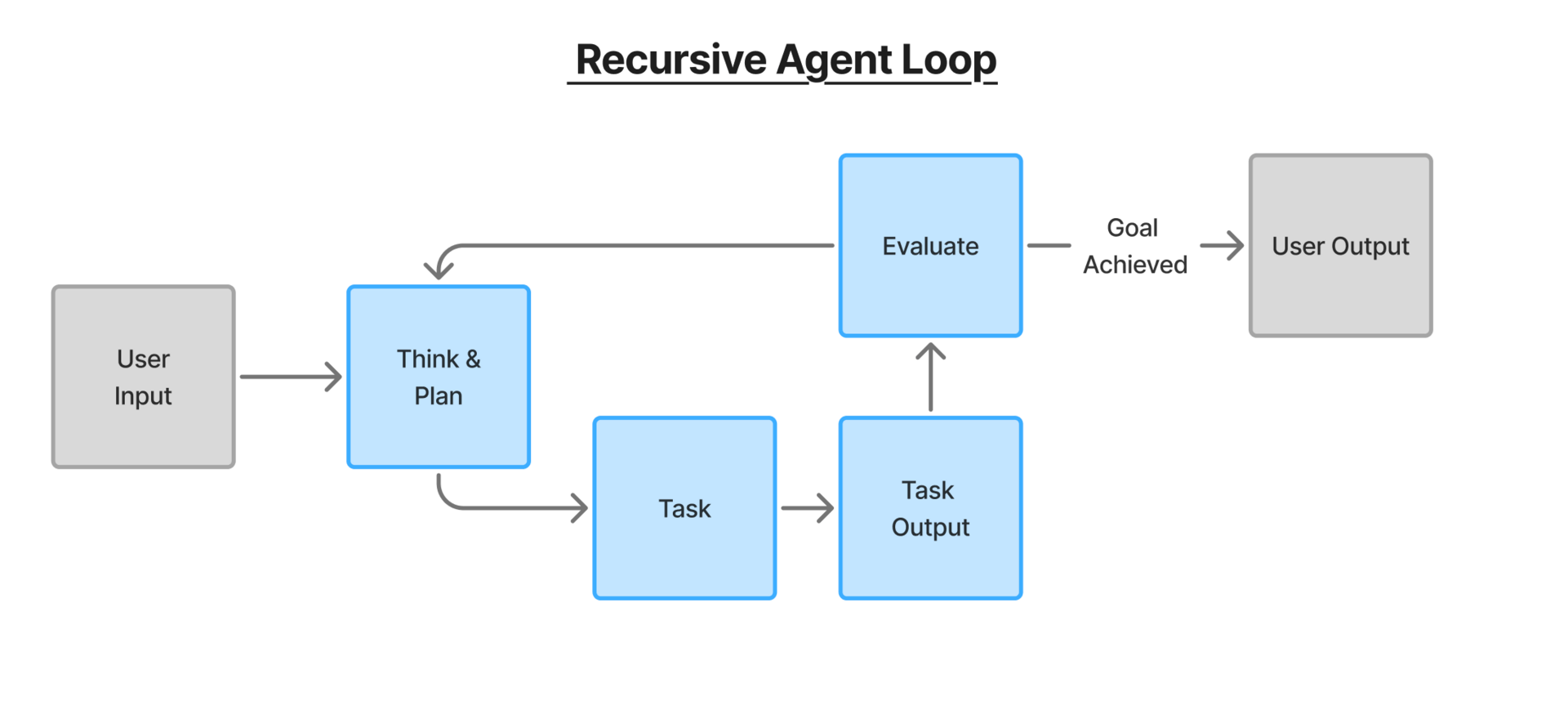

This is often referred to as a recursive or iterative loop. If the agent sees that its answer is incorrect or incomplete, it can try again and correct itself.
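To make the difference concrete, here’s a rough Python sketch of the two shapes: a chatbot that makes a single call, and an agent that checks its own output and retries. `call_model` and `looks_complete` are hypothetical stand-ins for an LLM call and whatever check your agent runs; they are not a real library API.

```python
from typing import Callable

def chatbot(prompt: str, call_model: Callable[[str], str]) -> str:
    # One pass: whatever the model produces first is the final answer.
    return call_model(prompt)

def agent(
    prompt: str,
    call_model: Callable[[str], str],
    looks_complete: Callable[[str], bool],
    max_attempts: int = 3,
) -> str:
    # Iterative loop: generate, check the result, and retry with feedback.
    answer = call_model(prompt)
    for _ in range(max_attempts - 1):
        if looks_complete(answer):
            break
        # Feed the previous attempt back in so the model can correct itself.
        answer = call_model(
            f"{prompt}\n\nYour previous answer was incomplete:\n{answer}\nPlease fix it."
        )
    # After max_attempts calls, the last answer is returned even if the check still fails.
    return answer

# Usage with a fake model: the first reply is incomplete, the second is fine.
replies = iter(["Hero section: TODO", "Hero section: headline, subtext, CTA button"])

def fake_model(_prompt: str) -> str:
    return next(replies)

print(agent("Build a hero section", fake_model, lambda a: "TODO" not in a))
# → Hero section: headline, subtext, CTA button
```

The `max_attempts` cap matters in practice: without it, an agent that never passes its own check would loop (and spend tokens) forever.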

In reality, most modern AI chatbot experiences (including ChatGPT) are now ‘agentic’, and in many cases they are actually a combination of specialist agents that do specific tasks (eg research agent, writing agent, image creation agent etc).

Input

How your users interact with the agent. In 90% of cases today (including our website builder agent below) this is via a chat interface. Other increasingly common entry points are “/commands” and “@agent” mentions in apps like Slack.

Introducing the ‘orchestrator’ agent

In the Agent flow above, I introduced the concept of a planning step. This is one of the key jobs of an Orchestrator.

Your Orchestrator has 4 roles in your system:

Planning - It breaks down your complex request into a simple list of tasks that need to be completed in a certain order. If you

Routing - In a system with multiple agents (eg research agent, image analysis agent, writing agent), the orchestrator acts as the manager and assigns the individual tasks to the best “worker” agent.

State Management - This is where memory is stored. As the agent loops through the tasks, the Orchestrator keeps track of what has been learnt & completed so far (this is called “Maintaining the State”). This allows the writer agent to be aware of the findings of the research agent.

Evaluation & Flow control - In its manager role, the orchestrator will inspect the output of each step. It may pass the work on to the next step/agent or get the original agent to repeat the task if it did not meet the requirements. It will also adjust the plan/tasks if required.
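The four roles can be sketched as a small Python loop. This is a simplified illustration under my own assumptions, not how our orchestrator is actually implemented: the workers are plain functions keyed by hypothetical names, the plan is hard-coded rather than produced by an LLM, `meets_requirements` is a hypothetical check, and re-planning is left out.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Task:
    description: str
    worker: str  # which specialist agent should handle this task

@dataclass
class Orchestrator:
    workers: dict[str, Callable[[str, dict], str]]
    meets_requirements: Callable[[Task, str], bool]
    max_retries: int = 2
    state: dict = field(default_factory=dict)  # shared memory across steps

    def plan(self, request: str) -> list[Task]:
        # Planning: in a real system an LLM would produce this task list.
        return [
            Task(f"Research: {request}", "research"),
            Task(f"Write: {request}", "writer"),
        ]

    def run(self, request: str) -> dict:
        for task in self.plan(request):
            worker = self.workers[task.worker]  # Routing
            for _ in range(self.max_retries + 1):
                output = worker(task.description, self.state)
                if self.meets_requirements(task, output):  # Evaluation
                    break
            # State management: later workers can read earlier results.
            # (If every retry fails we keep the last attempt; a real system might re-plan.)
            self.state[task.worker] = output
        return self.state

# Usage with two fake worker agents.
def research(task: str, state: dict) -> str:
    return "Finding: readers prefer short intros"

def writer(task: str, state: dict) -> str:
    # The writer sees the research agent's findings via the shared state.
    return f"Draft based on '{state['research']}'"

orch = Orchestrator(
    workers={"research": research, "writer": writer},
    meets_requirements=lambda task, output: len(output) > 0,
)
print(orch.run("newsletter intro")["writer"])
# → Draft based on 'Finding: readers prefer short intros'
```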

Here’s a practical example for our website builder:

A user uploads a screenshot of a website and says “copy this hero section for my site”.

The Orchestrator would create the following tasks and assign them accordingly. At each step it checks whether the task is complete.

Analyze the screenshot - assigned to an image analysis agent we created

O: Is there a clear description of how the site in the image is structured and styled? If not, try again.

Translate the screenshot’s layout description into how we build pages in the builder

O: Are there clear instructions on what is required, and do they match the description in the previous step? If not, try again.

Add the sections, containers and elements to replicate the layout

O: Has the layout been replicated as described? If not, make improvements.

Replicate the styling described

O: Has the styling been replicated as described? If not, make improvements.
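One way to encode a plan like this is as data: each step pairs a task and a worker with the orchestrator’s check question, which can then be turned into a prompt for an evaluator model. The worker names (`image_analysis`, `layout_planner`, `builder`), the prompt wording and the PASS/FAIL convention below are illustrative assumptions, not our actual setup.

```python
from typing import NamedTuple

class Step(NamedTuple):
    task: str
    worker: str  # hypothetical worker agent name
    check: str   # the question the orchestrator asks before moving on

HERO_SECTION_PLAN = [
    Step("Analyze the screenshot", "image_analysis",
         "Is there a clear description of how the site in the image is structured and styled?"),
    Step("Translate the layout description into how pages are built in the builder", "layout_planner",
         "Are there clear instructions on what is required, and do they match the previous step?"),
    Step("Add the sections, containers and elements to replicate the layout", "builder",
         "Has the layout been replicated as described?"),
    Step("Replicate the styling described", "builder",
         "Has the styling been replicated as described?"),
]

def judge_prompt(step: Step, previous_output: str, output: str) -> str:
    # Ask an evaluator model for a strict PASS/FAIL so the orchestrator
    # can decide whether to move on or retry the step.
    return (
        f"Task: {step.task}\n"
        f"Previous step output:\n{previous_output}\n\n"
        f"This step's output:\n{output}\n\n"
        f"Question: {step.check}\n"
        "Answer with PASS or FAIL, then one sentence explaining why."
    )

def passed(verdict: str) -> bool:
    # Anything that does not start with PASS counts as a failure (a safe default).
    return verdict.strip().upper().startswith("PASS")

prompt = judge_prompt(HERO_SECTION_PLAN[1], "Two-column hero, dark background", "Add a 2-column section")
print(passed("PASS - the instructions match the description"))  # → True
```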

Context

What is hopefully becoming evident is that for specialised agents to deliver an accurate output inside your product, they need to have a really deep understanding of the domain, the user and the product itself. This is what we call Context.

Context for AI is no different to the context you need to make any decision in work or life, and generally speaking the more context the better.

Based on my experience with our web builder agent, improving context is where you will spend most of your time.

Here are some examples of the types of context that we provide to our agent to try and maximise the likelihood of an accurate response:

info about your beehiiv publication including themes, styling, category, tone of voice etc

info about how the technology our website builder uses works (which tools we use), eg. standard web elements like headers, images, text and buttons

info about any custom features beehiiv has built on top of the tools we use (eg synced newsletter carousels)

info about what content exists across the rest of the website (eg home page, pricing page etc)

info about what content is already on the page that is being built/edited

conversation history

and much more….
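A common way to combine sources like these is to assemble them into one system prompt with clearly labelled sections. Here’s a minimal sketch; `build_context`, the section titles and the example values are all made up for illustration, and Markdown headings are used to label each section.

```python
def build_context(sections: dict[str, str], history: list[tuple[str, str]]) -> str:
    # Each context source becomes a labelled Markdown section; empty sources are skipped.
    parts = [f"## {title}\n{body.strip()}" for title, body in sections.items() if body.strip()]
    if history:
        # Conversation history goes last, one "role: text" line per turn.
        turns = "\n".join(f"{role}: {text}" for role, text in history)
        parts.append(f"## Conversation history\n{turns}")
    return "\n\n".join(parts)

context = build_context(
    {
        "Publication": "Category: tech. Tone of voice: friendly. Theme: dark.",
        "Builder elements": "Headers, images, text, buttons, synced newsletter carousels.",
        "Site pages": "Home, Pricing.",
        "Current page": "",  # nothing on the page yet, so this section is skipped
    },
    history=[("user", "Make the hero section match my brand")],
)
print(context)
```

Keeping each source in its own function or section also makes it easier to measure which pieces of context are actually pulling their weight.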

As you can see, it’s easy for context to grow very large very quickly, and while more context typically delivers a more accurate result, there are downsides.

Each time you send the agent a message, it has to read and process all of the context before it can begin to make a plan and perform the desired action. As a result, more context often means less speed.

From a product perspective this is one of the toughest parts: you need to constantly weigh up the tradeoff between speed and accuracy to find the goldilocks zone for your specific use case.

There are of course ways of optimizing context…

Crash course on context windows, token usage and Prompt Caching.

Simply put, the context window is the capacity of your AI’s short-term memory. It governs how many tokens (roughly 4 characters of English text ≈ 1 token) it can handle at once.

The more messages you send and the more loops your agent does, the fuller the context window gets, the more it costs you (you are charged per token), and the slower your agent gets (it needs to re-read the context every time you ask a new question).
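A back-of-the-envelope sketch of why long conversations get expensive, assuming the 4-characters-per-token rule of thumb (real tokenizers vary by model and language) and assuming the full context plus history is re-sent on every turn. The message sizes are made-up examples, and the agent’s own replies, which would also grow the history, are ignored.

```python
import math

def estimate_tokens(text: str) -> int:
    # Rule of thumb: roughly 4 characters of English text per token.
    return math.ceil(len(text) / 4)

def input_tokens_per_turn(system_context: str, messages: list[str]) -> list[int]:
    # On each turn the model re-reads the system context plus every message so far.
    context_tokens = estimate_tokens(system_context)
    totals = []
    history = 0
    for message in messages:
        history += estimate_tokens(message)
        totals.append(context_tokens + history)
    return totals

context = "x" * 40_000        # ~10,000 tokens of builder docs and site info
messages = ["y" * 400] * 5    # five user messages of ~100 tokens each
per_turn = input_tokens_per_turn(context, messages)
print(per_turn)               # → [10100, 10200, 10300, 10400, 10500]
print(sum(per_turn))          # → 51500 input tokens processed across five turns
```

Notice that the large, fixed context dominates every turn, which is exactly the part the optimisations below go after.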

There are three main ways to minimise token usage:

File format: Some file formats use more characters than others, eg. word (4 characters) vs {“word”} (8 characters).

If possible, use token-efficient file formats when passing context to AI. Minimising the use of unnecessary characters can reduce token usage significantly.

GOAT: Markdown / .md files (up to 80% fewer tokens than HTML)

Good: YAML

OK: JSON (This is the language of APIs so often you don’t have a choice)

Bad: HTML
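To see the difference, here’s the same made-up page description written in several formats, with a character count and a rough token estimate using the 4-characters-per-token rule of thumb. Character count is only a proxy (tokenizers treat punctuation and markup differently), so treat this as an illustration rather than a benchmark. The compact JSON row shows a standard-library option for when JSON is unavoidable: dropping the spaces after separators.

```python
import json
import math

page = {"hero": {"heading": "Welcome", "button": "Subscribe"}}

formats = {
    "Markdown": "## Hero\n- heading: Welcome\n- button: Subscribe\n",
    "JSON (compact)": json.dumps(page, separators=(",", ":")),
    "JSON": json.dumps(page),
    "HTML": '<section class="hero"><h1>Welcome</h1><button>Subscribe</button></section>',
}

for name, text in formats.items():
    # Rough estimate: roughly 4 characters per token.
    print(f"{name:<15} {len(text):>3} chars  ~{math.ceil(len(text) / 4)} tokens")
```

Even on a tiny snippet the HTML version is roughly 50% longer than the Markdown one, and the gap grows with real pages full of attributes and nested tags.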

Prompt Caching (PC): rather than re-reading the entire context every time there’s a question, PC allows you to temporarily freeze and remember a specific part of the context so that it doesn’t need to re-read it every time.

How this works: when the next question comes from the user, the model starts reading the context, sees that the beginning is identical to what is already in the cache, skips that part and just focuses on the new information. (This is a feature of the individual LLM providers, and with some of them it needs to be turned on explicitly.)

This was particularly helpful for our web builder agent, where we had lots of context about how the website builder worked and how the agent should use the tool, which only needed to be read once.
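The mechanics can be illustrated with a toy simulation. This is not how providers implement caching internally (they reuse the model’s already-processed state for a matching prompt prefix, cached entries expire after a while, and pricing differs by provider), but it shows why the static part of the context needs to come first and stay identical between requests. `PrefixCache` is a hypothetical class made up for this example.

```python
import hashlib
import math

def estimate_tokens(text: str) -> int:
    # Rule of thumb: roughly 4 characters per token.
    return math.ceil(len(text) / 4)

class PrefixCache:
    """Toy model of prompt caching: a stable prefix is 'processed' once and reused."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    def tokens_to_process(self, static_prefix: str, new_content: str) -> int:
        key = hashlib.sha256(static_prefix.encode()).hexdigest()
        if key in self._seen:
            # Cache hit: only the new part of the prompt needs full processing.
            return estimate_tokens(new_content)
        # Cache miss: process everything and remember the prefix for next time.
        self._seen.add(key)
        return estimate_tokens(static_prefix) + estimate_tokens(new_content)

builder_docs = "How the website builder works... " * 1000  # large and rarely changes
cache = PrefixCache()
first = cache.tokens_to_process(builder_docs, "Make the button green")
second = cache.tokens_to_process(builder_docs, "Now add a hero section")
print(first, second)  # the second request skips the ~8,250-token prefix
```

If anything in the prefix changes (even a timestamp), the hash no longer matches and the whole prefix is processed again, which is why frequently changing context belongs after the cached part.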

Summarisation: When your conversation is getting long and the context is growing, you can use summarisation to condense the history into a short summary paragraph, so the agent has much less content to consume.
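A minimal sketch of the idea: once the history passes a token budget, older turns are replaced by a single summary while the most recent turns are kept word for word. `compact_history` is a hypothetical helper, `summarize` stands in for a call to your model (here it’s a trivial placeholder so the example runs), and the `budget` and `keep_recent` values are arbitrary assumptions.

```python
import math
from typing import Callable

def estimate_tokens(text: str) -> int:
    # Rule of thumb: roughly 4 characters per token.
    return math.ceil(len(text) / 4)

def compact_history(
    history: list[str],
    summarize: Callable[[list[str]], str],
    budget: int = 1_000,
    keep_recent: int = 4,
) -> list[str]:
    # Under budget (or too short to compact): leave the conversation untouched.
    if sum(estimate_tokens(m) for m in history) <= budget or len(history) <= keep_recent:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    # Replace the older turns with one summary message; keep recent turns verbatim.
    return [f"Summary of earlier conversation: {summarize(older)}"] + recent

def placeholder_summary(messages: list[str]) -> str:
    # Placeholder: a real system would ask the LLM to summarise these messages.
    return f"{len(messages)} earlier messages about the homepage layout"

history = [f"message {i}: " + "details " * 100 for i in range(10)]
compacted = compact_history(history, placeholder_summary)
print(len(history), "->", len(compacted))  # → 10 -> 5
print(compacted[0])
```

Keeping the last few turns verbatim is a deliberate choice: the user’s most recent instructions are usually the ones where exact wording matters.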

Evals

Short for ‘evaluations’, Evals are how you know if your AI is working as intended. (This is going to be the buzzword for 2026 as everyone tries to dial in their agent’s performance.)

Evals are just QA but for AI tools; whereas with deterministic tools, you can QA against a specific input/output combo, a different approach is required for probabilistic ones.

Given the unpredictable nature of agents, Evals serve to prevent or minimise regressions (where a new fix breaks something that used to work).
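Because the same input can produce different outputs, a useful eval checks a property of the output rather than an exact string, runs each case several times, and compares the pass rate against the previous version to catch regressions. A minimal sketch; the test cases, the fake agents and the 0.1 tolerance are all hypothetical.

```python
from typing import Callable

# Each case is a prompt plus a property the output must satisfy (not an exact match).
CASES = [
    ("Change the button to green", lambda out: "green" in out.lower()),
    ("Add a hero section", lambda out: "hero" in out.lower()),
]

def pass_rate(run_agent: Callable[[str], str], samples: int = 5) -> float:
    # Run every case several times because the agent's output can vary run to run.
    results = [check(run_agent(prompt)) for prompt, check in CASES for _ in range(samples)]
    return sum(results) / len(results)

def is_regression(baseline: float, candidate: float, tolerance: float = 0.1) -> bool:
    # Flag the new version if it is meaningfully worse than the last known-good one.
    return candidate < baseline - tolerance

def old_agent(prompt: str) -> str:
    return f"Done: {prompt}"

def new_agent(prompt: str) -> str:
    # A "fix" for buttons that accidentally breaks hero sections.
    return "Button is now green" if "button" in prompt else "Updated the page"

baseline, candidate = pass_rate(old_agent), pass_rate(new_agent)
print(baseline, candidate, is_regression(baseline, candidate))  # → 1.0 0.5 True
```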

There are two camps on how to do Evals (both are useful, and a combination of both is wise):

Vibes-based Evals - Exactly what it sounds like. Using the agent for various tasks and getting a gut-feel for whether it is giving the correct response. (Easier for visual tasks like “build a hero section” or “change the button to green”)

Structured Evals - A more rigorous approach that involves manually reviewing and categorising different interactions with your agent, so you can review trends over time.
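Once interactions have been reviewed and labelled, even a simple tally by week makes trends visible. A sketch with made-up labels and a hypothetical record shape of (week, category assigned by a reviewer):

```python
from collections import Counter, defaultdict

# Each reviewed interaction: (week, category assigned by a human reviewer). Example data only.
reviewed = [
    ("week-1", "correct"), ("week-1", "wrong_layout"), ("week-1", "correct"),
    ("week-2", "correct"), ("week-2", "correct"), ("week-2", "wrong_styling"),
    ("week-2", "correct"),
]

def weekly_breakdown(rows: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    by_week: defaultdict[str, Counter] = defaultdict(Counter)
    for week, category in rows:
        by_week[week][category] += 1
    # Share of interactions in each category, per week, in week order.
    return {
        week: {cat: n / sum(counts.values()) for cat, n in counts.items()}
        for week, counts in sorted(by_week.items())
    }

for week, shares in weekly_breakdown(reviewed).items():
    print(week, {cat: f"{share:.0%}" for cat, share in shares.items()})
```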

(This is a great podcast on Evals if you want to learn more)

Key advice: start recording your agent data (queries, responses, outputs, errors) from day 1, so that when you’re ready to start doing Evals you have a solid base of data.
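Recording this data can be as simple as appending one JSON object per interaction to a log file (the JSON Lines convention). A minimal sketch using only the Python standard library; `log_interaction`, `load_interactions`, the field names and the `agent_log.jsonl` path are assumptions, and in production you would more likely send this to your analytics or observability tooling.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path
from typing import Optional

LOG_PATH = Path("agent_log.jsonl")  # hypothetical default location

def log_interaction(
    query: str,
    response: str,
    actions: list[str],
    error: Optional[str] = None,
    path: Path = LOG_PATH,
) -> dict:
    record = {
        "id": str(uuid.uuid4()),
        "timestamp": time.time(),
        "query": query,        # what the user asked
        "response": response,  # what the agent said back
        "actions": actions,    # what it actually changed (the output)
        "error": error,        # any failure, so it can be reviewed later
    }
    # One JSON object per line makes the log easy to append to and to load later.
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record

def load_interactions(path: Path = LOG_PATH) -> list[dict]:
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]

# Usage: write to a temporary file so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    log_file = Path(tmp) / "agent_log.jsonl"
    log_interaction("Change the button to green", "Done, the button is now green.",
                    ["set button color"], path=log_file)
    log_interaction("Copy this hero section", "", [], error="image analysis timed out", path=log_file)
    records = load_interactions(log_file)

print(len(records), records[1]["error"])  # → 2 image analysis timed out
```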

See you next week.

J